My Top Picks for the Best Data Analytics Tools You Need

Analytics · 15 Nov 2024

Discover my top picks for the best data analytics tools that can transform your insights into action. Let’s explore what works for you and your projects!

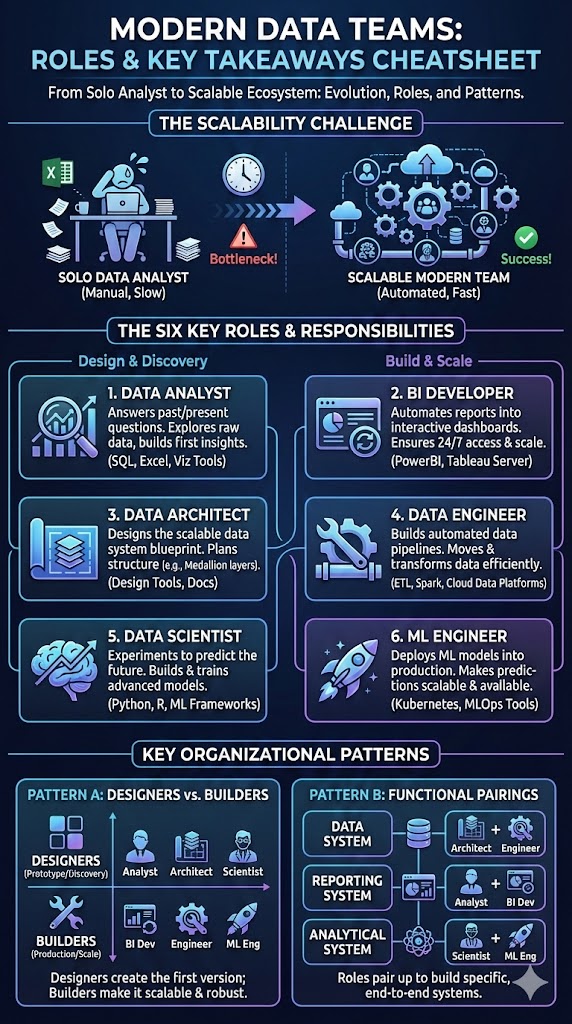

Modern Data Teams Guide: Roles, Lifecycle, Tools, Governance, and Hiring, with Practical Tips to Build Better Data Teams

Analytics · 11 Dec 2025

Modern data teams are cross-functional groups that turn raw data into reliable insights and production features by combining engineers, analysts, scientists, architects, and ML specialists. This matters because your company’s ability to act on data depends less on a single tool and more on how these roles collaborate across ingestion, transformation, analysis, modeling, deployment, and governance. In this article you'll learn what each role does, how the lifecycle flows, which tools form a modern stack, operating models that scale, governance and quality best practices, and practical hiring and career tips to help your team deliver measurable business value.

Ask: who keeps data reliable and available? You rely on data engineers to build ingestion pipelines, manage warehouses or lakehouses, and ensure data freshness. Analytics engineers sit between engineering and analysis: they own semantic models, tests, and transformation code (dbt-style). Actionable tip: implement automated tests (schema, null checks, freshness) in CI so new sources don't break downstream reports.
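To make the CI tip concrete, here is a minimal sketch of the three checks mentioned above (schema, nulls, freshness) in plain Python. The column names, the six-hour staleness window, and the `check_rows` helper are all assumptions for illustration; in a dbt project you would more likely express these as built-in or custom tests.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical expectations for one source table; names are illustrative.
EXPECTED_SCHEMA = {"order_id": int, "customer_id": int, "loaded_at": datetime}
MAX_STALENESS = timedelta(hours=6)  # assumed freshness window


def check_rows(rows, now):
    """Return a list of human-readable failures; an empty list means pass."""
    failures = []
    for i, row in enumerate(rows):
        # Schema check: the row must carry exactly the expected columns.
        if set(row) != set(EXPECTED_SCHEMA):
            failures.append(f"row {i}: columns {sorted(row)} do not match schema")
            continue
        for col, expected_type in EXPECTED_SCHEMA.items():
            value = row[col]
            if value is None:
                # Null check.
                failures.append(f"row {i}: {col} is null")
            elif not isinstance(value, expected_type):
                failures.append(f"row {i}: {col} has type {type(value).__name__}")
    # Freshness check: the newest load must fall inside the allowed window.
    loaded = [r["loaded_at"] for r in rows if isinstance(r.get("loaded_at"), datetime)]
    if not loaded or now - max(loaded) > MAX_STALENESS:
        failures.append("freshness: no rows loaded within the allowed window")
    return failures
```

A CI job could call `check_rows` on a sample of each new source and fail the build whenever the returned list is non-empty.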

Imagine getting a question from Sales and shipping an insight within a day; that’s the analyst’s value. Analysts define KPIs, create dashboards, and translate stakeholder needs into queries and visualizations. Example: build a canonical metrics layer to avoid conflicting churn numbers across teams. Actionable tip: pair analysts with a product owner for a two-week sprint to deliver high-impact dashboards.

You need data scientists to discover patterns and validate hypotheses, ML engineers to productionize models, and architects to design scalable schemas and governance. Practical insight: require model cards and performance baselines before production deployment so retraining and rollback are tractable.
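One way to enforce the model-card requirement is a small gate that runs before deployment. The sketch below is an assumption about how such a gate could look: the `ModelCard` fields and the `deployment_blockers` helper are hypothetical, and AUC is used only because it appears as the example metric for data scientists.

```python
from dataclasses import dataclass


@dataclass
class ModelCard:
    # Fields are illustrative; real model cards usually carry more detail.
    name: str
    owner: str
    intended_use: str
    baseline_auc: float   # performance of the currently deployed model
    candidate_auc: float  # performance of the model proposed for release


def deployment_blockers(card, min_gain=0.0):
    """List reasons the candidate should not ship; empty means it may proceed."""
    blockers = []
    for field in ("name", "owner", "intended_use"):
        if not getattr(card, field).strip():
            blockers.append(f"model card is missing '{field}'")
    # The candidate must at least match the recorded baseline (plus min_gain).
    if card.candidate_auc < card.baseline_auc + min_gain:
        blockers.append(
            f"candidate AUC {card.candidate_auc:.3f} does not beat "
            f"baseline {card.baseline_auc:.3f}"
        )
    return blockers
```

Keeping the baseline on the card also gives you a documented reference point when deciding whether to roll back.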

| Role | Focus | Key Skills | Deliverable | Example Metric |
|---|---|---|---|---|
| Data Engineer | Reliability & ingestion | SQL, Python, ETL/ELT | Pipelines, schemas | Data freshness (hours) |
| Analytics Engineer | Modeling & transformations | dbt, SQL, testing | Semantic models | Test coverage % |
| Data Analyst | Reporting & insights | SQL, viz, storytelling | Dashboards | Dashboard adoption |
| Data Scientist | Experimentation & models | ML, stats, Python | Models, experiments | Prediction AUC |
| ML Engineer | Deployment & monitoring | APIs, infra, MLOps | Model endpoints | Latency, error rate |
| Data Architect | Platform & governance | Modeling, systems | Platform designs | Cost per TB |

Who does source onboarding? Usually data engineers, but success depends on clear contracts. Start by cataloging sources with owners, frequency, and SLAs. Example: require every new source to include an owner, sample rows, and a retention policy before onboarding. Actionable insight: run a three-week onboarding process with scheduled reminders, a validation checklist, and a signed data contract to avoid downstream confusion.
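The onboarding requirements above can be checked mechanically. This is a minimal sketch assuming the contract arrives as a plain dictionary; the field names (`sla_hours`, `retention_days`, and so on) and the `validate_contract` helper are hypothetical, not a standard contract format.

```python
# Assumed contract fields, mirroring the requirements in the text:
# an owner, a frequency, an SLA, sample rows, and a retention policy.
REQUIRED_FIELDS = ("owner", "frequency", "sla_hours", "sample_rows", "retention_days")


def validate_contract(contract):
    """Return the problems that should block onboarding of a new source."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = contract.get(field)
        if value is None or value == "" or value == []:
            problems.append(f"missing or empty field: {field}")
    # A retention policy of zero or fewer days is treated as invalid.
    retention = contract.get("retention_days")
    if isinstance(retention, int) and retention <= 0:
        problems.append("retention_days must be positive")
    return problems
```

An empty result means the source passes the validation checklist; anything else goes back to the source owner before pipelines are built.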

Want reproducible analytics? Use transformation-as-code and shared semantic layers so analysts, scientists, and product teams query the same definitions. Example: adopt dbt for transformations and store canonical metrics in a metrics layer. Actionable steps: enforce pull-request reviews for model changes and keep transformations modular so features can be reused across models.

How do experiments become features? Data scientists prototype models, ML engineers productionize them, and analysts track business impact. Make feedback loops explicit: production model drift triggers a triage runbook and a data quality incident. Actionable tip: integrate model monitoring with alerting and connect alerts to a rotation schedule so someone is always on-call.
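As a rough illustration of wiring drift alerts to a rotation, the sketch below compares a recent metric against its baseline and assigns the alert to whoever is on call that week. The rotation list, the 0.05 tolerance, and the runbook name are placeholders; a real setup would pull the schedule from your paging tool.

```python
from datetime import date

# Hypothetical weekly rotation; in practice this comes from a paging tool.
ROTATION = ["ana", "bo", "chen"]


def on_call(day, rotation=ROTATION):
    """Pick the on-call person by ISO week number so the rotation changes weekly."""
    return rotation[day.isocalendar()[1] % len(rotation)]


def drift_alert(baseline_auc, recent_auc, day, tolerance=0.05):
    """Return an alert dict when performance drops past the tolerance, else None."""
    drop = baseline_auc - recent_auc
    if drop <= tolerance:
        return None
    return {
        "severity": "page",
        "assignee": on_call(day),
        "message": f"AUC dropped by {drop:.3f} (baseline {baseline_auc:.3f})",
        "runbook": "model-drift-triage",  # placeholder runbook name
    }
```

The point of the design is that every alert leaves the function with a named assignee and a runbook reference, so nothing lands in an unowned channel.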

Which storage should you pick? Warehouses (Snowflake, BigQuery) excel at analytics, lakes excel at raw capture, and lakehouses combine both. Example: many teams use a lake to land raw events and a warehouse for modeled analytics. Actionable insight: choose a primary compute engine early and design data schemas to minimize cross-system joins.

Need reliable pipelines? Use ELT patterns to load raw data quickly, then transform in the warehouse. Tools like Airflow, Prefect, or managed orchestration automate scheduling and retries. For high-volume events, add streaming with Kafka or Pub/Sub. Actionable tip: centralize scheduling logic and use templated DAGs to reduce duplicated complexity.
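The idea behind templated DAGs can be sketched without committing to a specific orchestrator. The example below uses only the Python standard library (`graphlib`, available from Python 3.9); the `build_elt_pipeline` factory and the task names are assumptions, and the resulting spec would still need to be translated into Airflow, Prefect, or whichever tool you run.

```python
from graphlib import TopologicalSorter


def build_elt_pipeline(source, schedule="@hourly", retries=3):
    """Produce one pipeline spec from a shared template (extract, load, transform)."""
    # Each task maps to the set of tasks it depends on.
    tasks = {
        f"extract_{source}": set(),
        f"load_{source}": {f"extract_{source}"},
        f"transform_{source}": {f"load_{source}"},
    }
    return {
        "name": f"elt_{source}",
        "schedule": schedule,
        "retries": retries,
        "tasks": tasks,
        # static_order() yields tasks so that dependencies come first.
        "run_order": list(TopologicalSorter(tasks).static_order()),
    }


# One template, many sources: scheduling logic lives in a single place.
PIPELINES = [build_elt_pipeline(s) for s in ("orders", "events")]
```

Changing the retry policy or schedule default in the factory then updates every generated pipeline at once, which is the duplication the tip is trying to remove.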

Want trustworthy models in production? Use feature stores to enforce consistency between training and serving, model serving frameworks for low-latency inference, and observability tools to track performance drift and lineage. Actionable step: require feature tests that validate training-serving parity before model deployment.
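A training-serving parity test can be as simple as running both feature implementations over the same raw samples and comparing the results. In this sketch the two feature functions and their logic are invented for illustration; with a feature store, both paths would ideally read the same definition and the test becomes a safety net.

```python
import math


def training_features(raw):
    """Feature logic as used when building the training set (illustrative)."""
    return {
        "log_spend": math.log1p(raw["spend"]),
        "is_active": 1.0 if raw["days_since_login"] <= 30 else 0.0,
    }


def serving_features(raw):
    """Feature logic as reimplemented in the serving path (illustrative)."""
    return {
        "log_spend": math.log1p(raw["spend"]),
        "is_active": 1.0 if raw["days_since_login"] <= 30 else 0.0,
    }


def parity_mismatches(train_fn, serve_fn, samples, tol=1e-9):
    """Return (sample index, feature name) pairs where the two paths disagree."""
    mismatches = []
    for i, raw in enumerate(samples):
        train, serve = train_fn(raw), serve_fn(raw)
        if train.keys() != serve.keys():
            mismatches.append((i, "feature names differ"))
            continue
        for name in train:
            if not math.isclose(train[name], serve[name], abs_tol=tol):
                mismatches.append((i, name))
    return mismatches
```

Boundary values (here, exactly 30 days since login) are worth including in the samples, since off-by-one differences between the two paths tend to hide there.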

| Layer | Common Tools | Why it helps |
|---|---|---|
| Warehouse/Lakehouse | Snowflake, BigQuery, Databricks | Fast analytics and scalable storage |
| Transformation | dbt, Spark, SQL | Versioned, tested models |
| Orchestration | Airflow, Prefect, Dagster | Reliable scheduling |
| Streaming | Kafka, Pub/Sub, Kinesis | Real-time events |
| Feature Store & MLOps | Feast, Tecton, MLflow | Training-serving parity |
| Observability | Monte Carlo, Datadog, Prometheus | Detect drift & incidents |

Which model fits you? Centralized teams keep expertise and standards in one group, ideal for small-to-medium orgs that need tight governance. Decentralized teams embed analysts and engineers in product teams for speed but risk duplication. Federated models combine both: a central platform team provides tools and standards while domain-aligned squads deliver product-focused analytics. Actionable framework: if you have more than five product teams, move to a federated model with a central platform to avoid duplicated infra costs.

How can you ship reliably? Implement DataOps practices: automated testing for transformations, CI for dbt and ML pipelines, and reproducible environments via containerization. Example checklist: unit tests for SQL models, integration tests for pipelines, and end-to-end smoke tests for dashboards. Actionable tip: enforce PR reviews and automated test gates before merging to production branches.
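For the "unit tests for SQL models" item, one lightweight approach is to run the model's SQL against a handful of fixture rows in an in-memory database. The sketch below uses SQLite from the standard library; the `orders` table and the revenue query are hypothetical, and SQLite's dialect differs from most warehouses, so treat this as a logic check rather than a full compatibility test.

```python
import sqlite3

# Hypothetical transformation: one row per customer with total paid revenue.
CUSTOMER_REVENUE_SQL = """
SELECT customer_id, SUM(amount) AS revenue
FROM orders
WHERE status = 'paid'
GROUP BY customer_id
ORDER BY customer_id
"""


def run_model(rows):
    """Run the model against fixture rows in an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (customer_id INTEGER, amount REAL, status TEXT)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        return conn.execute(CUSTOMER_REVENUE_SQL).fetchall()
    finally:
        conn.close()


def test_refunded_orders_are_excluded():
    fixture = [(1, 10.0, "paid"), (1, 5.0, "refunded"), (2, 7.5, "paid")]
    assert run_model(fixture) == [(1, 10.0), (2, 7.5)]
```

A test runner such as pytest would pick up the `test_` function automatically, which makes it easy to use as a merge gate.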

Want to align priorities? Use a lightweight contract between data and business: define outcomes, success metrics, timelines, and maintenance responsibilities. Example: a quarterly roadmap with committed SLAs for dataset freshness and dashboard delivery builds trust. Actionable step: run a monthly sync between data leads and product managers to re-prioritize based on impact and capacity.

Who owns each dataset? Assign dataset owners and publish metadata in a catalog so you can discover lineage, owners, and usage. Use data contracts to codify expectations (freshness, schema, access). Actionable tip: require dataset owner fields in the catalog and link dashboards back to source tables for traceability.

How do you detect bad data early? Build layered quality checks: unit tests in transformations, monitoring for anomalies, and lineage to find root causes. Example practice: auto-create incidents when row counts or cardinality deviate by >20% versus baseline and assign triage to the owner within one business day. Actionable step: maintain a runbook that maps alerts to who fixes what and how to roll back faulty transformations.
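The row-count rule above translates into a few lines of code. This is a sketch under stated assumptions: the baseline is taken as the mean of recent daily counts, `history` is non-empty, and the incident is just a dictionary; creating a real ticket and enforcing the one-business-day triage window would sit in your incident tooling.

```python
from statistics import mean


def deviation_incident(dataset, owner, history, today_count, threshold=0.20):
    """Open an incident when today's row count deviates from the baseline
    by more than the threshold (20% by default); return None otherwise."""
    baseline = mean(history)  # assumes history is a non-empty list of counts
    if baseline == 0:
        deviation = float("inf") if today_count else 0.0
    else:
        deviation = abs(today_count - baseline) / baseline
    if deviation <= threshold:
        return None
    return {
        "dataset": dataset,
        "assignee": owner,  # triage goes to the dataset owner
        "deviation": round(deviation, 3),
        "summary": f"{dataset}: row count {today_count} vs baseline {baseline:.0f}",
    }
```

The same shape works for cardinality checks: swap the row counts for distinct-value counts of a key column.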

Need to protect sensitive data? Implement role-based access controls, column-level masking, and strict logging. For regulated data (PII, PCI, HIPAA), apply encryption at rest and in transit and keep an audit trail. Actionable advice: use automated scans to tag sensitive fields and enforce masking in downstream environments.
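Automated tagging and masking can start from something as simple as column-name patterns. The sketch below is illustrative only: the patterns, the `tag_columns` and `mask_row` helpers, and the truncated SHA-256 digest are assumptions, and an unsalted hash is not strong anonymization on its own, so regulated data would need your platform's masking policies or proper tokenization.

```python
import hashlib
import re

# Illustrative name patterns; a real scanner would also sample column values.
SENSITIVE_PATTERNS = {
    "pii": re.compile(r"(email|phone|ssn|birth|address)", re.IGNORECASE),
    "pci": re.compile(r"(card_number|cvv)", re.IGNORECASE),
}


def tag_columns(columns):
    """Map each column whose name looks sensitive to its first matching tag."""
    tags = {}
    for col in columns:
        for tag, pattern in SENSITIVE_PATTERNS.items():
            if pattern.search(col):
                tags[col] = tag
                break
    return tags


def mask_row(row, tags):
    """Replace tagged, non-null values with a short digest; pass others through."""
    masked = {}
    for col, value in row.items():
        if col in tags and value is not None:
            digest = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
            masked[col] = digest[:12]
        else:
            masked[col] = value
    return masked
```

Running `tag_columns` as part of source onboarding means the tags exist before any downstream environment receives the data.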

Who should you hire first? For early-stage teams, hire a strong data engineer and an analytics-focused analyst. Look for: data engineers with ETL/ELT experience, cloud SQL skills, and pipeline testing fluency; analytics engineers comfortable with dbt and testing; analysts with storytelling and SQL fluency; data scientists with experimental design and model validation skills; ML engineers familiar with serving and monitoring; architects with system-level thinking. Soft skills: communication, domain curiosity, and collaboration matter more than narrow technical tricks.

Want to retain talent? Create clear ladders tying technical scope to business impact. Offer rotational programs so analysts shadow engineers for a quarter or ML engineers pair with data scientists on model pipelines. Actionable idea: run monthly brown-bag sessions where team members demo a pipeline, dashboard, or model and share lessons learned.

What should you prepare for? Expect more automation in pipeline creation, stronger MLOps practices, and new productivity gains from generative AI for code and analyses. Architects will prioritize modular, observability-first platforms, and teams will emphasize interoperability. Actionable preparation: adopt modular tooling, invest in observability early, and pilot generative AI for templating analyses while safeguarding governance and review processes.

Modern data teams succeed when roles are clear, collaboration is structured, and platforms are reliable and observable. Start by mapping owners and SLAs, invest in transformation-as-code and test automation, and create feedback loops between analysts, scientists, and ML engineers so models and dashboards stay useful. Actionable next steps: (1) run a two-week audit of your top 10 datasets to assign owners and SLAs, (2) enable a CI pipeline for transformations and tests, and (3) pilot a federated model if you support multiple product domains. These steps will help you move faster while reducing downstream incidents and confusion. Remember: modern data teams are about people and processes as much as tools. Focus on clear contracts, shared definitions, and continuous learning so your team turns data into consistent business value.

